We have designed an odometric system for localizing a walking humanoid robot using standard sensory equipment, i.e., a camera, an Inertial Measurement Unit, joint encoders and foot pressure sensors. Our method has the prediction-correction structure of an Extended Kalman Filter. At each sampling instant, position and orientation of the torso are predicted on the basis of the differential kinematic map from the support foot to the torso, using encoder data from the support joints. The measurements coming from the camera (head position and orientation, actually reconstructed by a V-SLAM algorithm) and the Inertial Measurement Unit (torso orientation) are then compared with their predicted values to correct the estimate. The filter is made aware of the current placement of the support foot by an asynchronous update mechanism triggered by the pressure sensors.

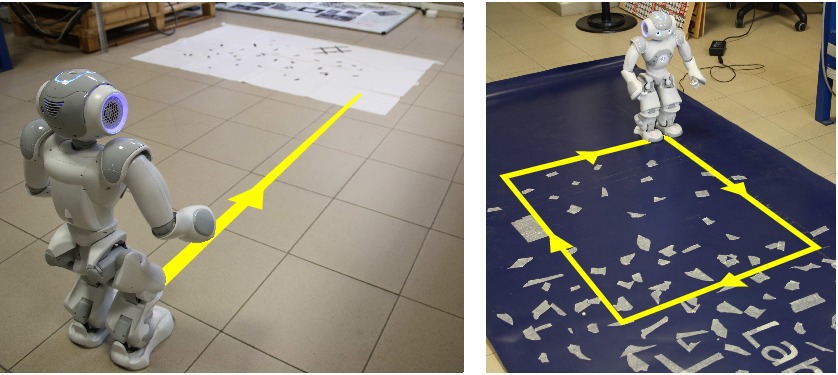
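The prediction-correction cycle described above can be illustrated with a deliberately reduced sketch. It is not the filter used in the experiments. The state is cut down to a planar torso pose (x, y, yaw). The kinematic increment `delta` is assumed to be already computed from the support-leg encoders and expressed in the world frame. The measurement is assumed to observe the pose directly, and all covariances are assumed diagonal. Under these assumptions the EKF collapses to a per-component linear Kalman update. The names `wrap_angle`, `predict` and `correct` are hypothetical.

```python
import math


def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def predict(state, var, delta, process_var):
    """Prediction: add the kinematic increment and inflate the variances.

    state, delta: (x, y, yaw); var, process_var: per-component variances.
    Assumption: delta is already expressed in the world frame.
    """
    x, y, yaw = state
    dx, dy, dyaw = delta
    new_state = (x + dx, y + dy, wrap_angle(yaw + dyaw))
    new_var = tuple(v + q for v, q in zip(var, process_var))
    return new_state, new_var


def correct(state, var, measurement, meas_var):
    """Correction: blend prediction and measurement, one component at a time.

    Assumption: the measurement observes (x, y, yaw) directly and all
    covariances are diagonal, so each gain is a scalar.
    """
    new_state, new_var = [], []
    for i, (s, v, z, r) in enumerate(zip(state, var, measurement, meas_var)):
        innovation = z - s
        if i == 2:  # yaw: take the shortest angular difference
            innovation = wrap_angle(innovation)
        gain = v / (v + r)
        value = s + gain * innovation
        new_state.append(wrap_angle(value) if i == 2 else value)
        new_var.append((1.0 - gain) * v)
    return tuple(new_state), tuple(new_var)


# One filter cycle with illustrative (made-up) numbers.
state, var = (0.0, 0.0, 0.0), (0.01, 0.01, 0.01)
state, var = predict(state, var, (0.1, 0.0, 0.0), (0.01, 0.01, 0.01))
state, var = correct(state, var, (0.2, 0.0, 0.0), (0.02, 0.02, 0.02))
print(state, var)
```

In this sketch, the asynchronous support-foot update would correspond to changing the reference from which `delta` is computed whenever the pressure sensors report a new support foot. That step is omitted here.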

The experimental platform used to validate the proposed odometric localization is the NAO humanoid (version 3.3) developed by Aldebaran Robotics. As a V-SLAM module, we have selected the well-known PTAM algorithm.

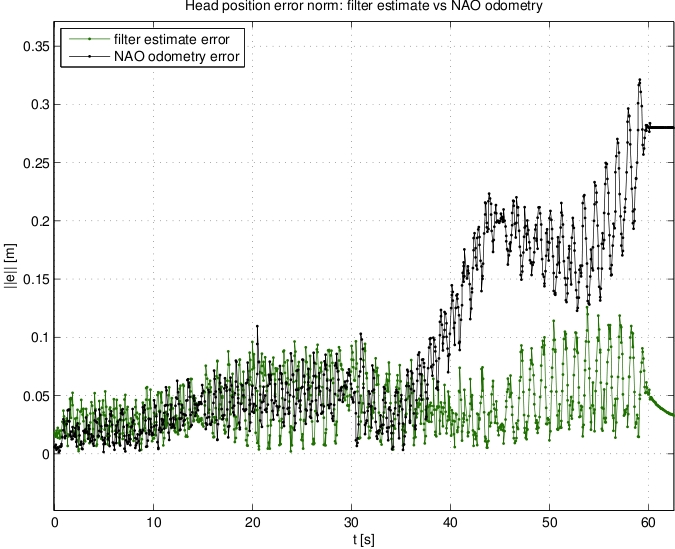

Our experiments begin with an initialization phase, in which NAO performs a sideways motion on the spot to collect visual information and allow PTAM to recover the metric scale. After that, motion commands are sent to the robot using the NAO APIs, and in particular the setWalkTargetVelocity function. This is an open-loop command, and therefore the robot will soon veer off the nominal course due to non-idealities (most importantly, foot slippage). A camera mounted on the laboratory ceiling records the whole experiment and is used to generate a ground truth for the coordinates of the head; this is compared with the value of the same coordinates reconstructed using the filter estimate for the torso pose and forward kinematics from the torso to the head. For comparison, we also stored the built-in NAO odometry provided by the getPosition function.

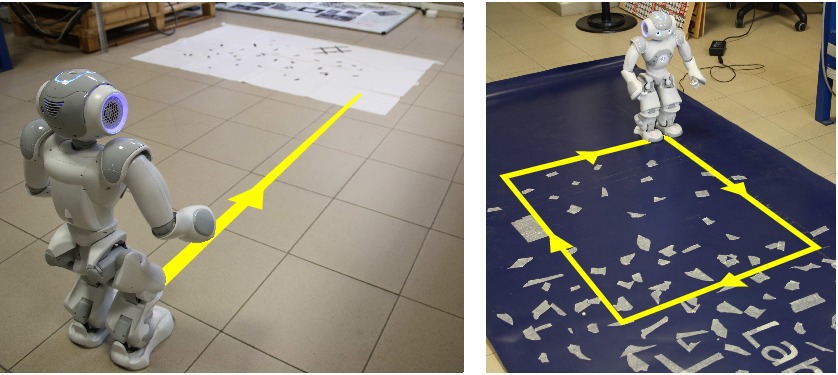
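The comparison against the ceiling-camera ground truth can be sketched as follows, restricted to the plane for brevity. The estimated torso pose is composed with a torso-to-head transform to obtain the head position, which is then compared with the reference track. The helper names `compose` and `position_rmse`, the fixed planar head offset and all numeric values are assumptions made for illustration. On the robot the torso-to-head transform would come from forward kinematics and change with the neck joints. The two trajectories are assumed to be time-aligned.

```python
import math


def compose(pose_a, pose_b):
    """Planar pose composition: pose_b is expressed in the frame of pose_a."""
    xa, ya, tha = pose_a
    xb, yb, thb = pose_b
    c, s = math.cos(tha), math.sin(tha)
    return (xa + c * xb - s * yb, ya + s * xb + c * yb, tha + thb)


def position_rmse(estimated, reference):
    """Root-mean-square planar distance between two equally long point lists."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    total = 0.0
    for (xe, ye), (xr, yr) in zip(estimated, reference):
        total += (xe - xr) ** 2 + (ye - yr) ** 2
    return math.sqrt(total / len(estimated))


# Illustrative (made-up) data: torso estimates from the filter, a
# hypothetical head offset in the torso frame, and a reference track.
torso_estimates = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.1)]
head_in_torso = (0.02, 0.0, 0.0)
head_estimates = [compose(t, head_in_torso)[:2] for t in torso_estimates]
ceiling_track = [(0.02, 0.0), (0.12, 0.005)]
print(position_rmse(head_estimates, ceiling_track))
```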

Nominal path: line

In the first localization experiment, the robot is commanded to walk in a straight line at a velocity of 0.1 m/s. As shown below, the proposed odometric localization provides an estimate that stays close to the ground truth, whereas the built-in NAO odometry fails to detect that the robot leaves the nominal path. Comparing with the raw camera position estimate produced by PTAM, one can also appreciate how the proposed filter smooths this estimate and makes it consistent with humanoid motion.

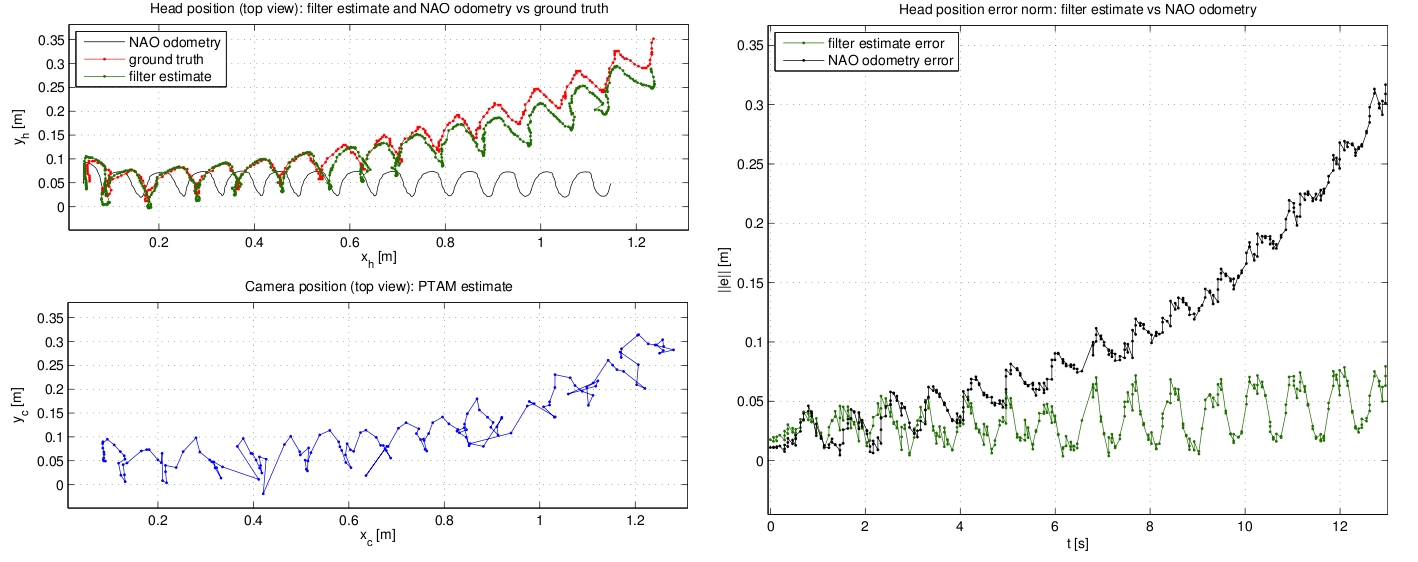
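One possible way to quantify how far a trajectory leaves the nominal straight line is the signed lateral (cross-track) distance from that line. The sketch below is not taken from the experiments. The function name `cross_track_error`, the sign convention (positive to the left of the walking direction) and the sample points are assumptions chosen for illustration.

```python
import math


def cross_track_error(point, start, heading):
    """Signed lateral distance of `point` from the nominal line.

    The line passes through `start` with direction `heading` (radians).
    Positive values lie to the left of the walking direction.
    """
    dx = point[0] - start[0]
    dy = point[1] - start[1]
    return -math.sin(heading) * dx + math.cos(heading) * dy


# Illustrative (made-up) positions along a walk nominally aligned with +x.
track = [(0.0, 0.0), (0.5, 0.03), (1.0, 0.09)]
deviations = [cross_track_error(p, (0.0, 0.0), 0.0) for p in track]
print(deviations)
```

Applied to the ground truth, the filter estimate and the built-in odometry in turn, such a measure would make the drift of each source directly comparable.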

Nominal path: square

In the second localization experiment, the robot is commanded to walk along a square path at 0.1 m/s. The results, shown below, again indicate that the proposed odometric localization scheme is quite accurate and outperforms the built-in odometry.

Video clip

[1] G. Oriolo, A. Paolillo, L. Rosa, and M. Vendittelli, "Vision-Based Odometric Localization for Humanoids using a Kinematic EKF," 2012 IEEE-RAS Int. Conf. on Humanoid Robots, Osaka, Japan, Nov.-Dec. 2012.