The method presented here achieves safe navigation in cluttered environments. We propose several vision-based controllers that maximize the clearance from the images of the surrounding obstacles by regulating suitable image features, while guaranteeing safety during navigation. In obstacle-free conditions, this is equivalent to walking at the center of the corridor. If the passage is too narrow to be traversed safely by moving as a unicycle, the controllers command the gaze direction and the torso of the robot separately, which allows sideways walking.
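As a rough illustration of the centering behaviour (not the controllers of [1] or [2]), the sketch below steers the midpoint of two horizontal image features toward the image center with a proportional law. The function name, the gain, the saturation value and the sign convention (positive angular velocity turns left, image x grows to the right) are all assumptions made for this example.

```python
def centering_command(x_left, x_right, image_width=320, gain=0.005, max_omega=0.5):
    """Return an angular velocity (rad/s) that drives the midpoint of two
    obstacle features toward the horizontal image center.

    All parameter values are hypothetical and chosen only for illustration.
    """
    # Midpoint between the left and right features, in pixels.
    x_mid = 0.5 * (x_left + x_right)
    # Positive error: the free space is to the right of the image center.
    error = x_mid - image_width / 2.0
    # Proportional law; a negative command turns right under the assumed convention.
    omega = -gain * error
    # Saturate the command to keep it within a plausible range.
    return max(-max_omega, min(max_omega, omega))
```

When the two features are symmetric about the image center the command is zero, which corresponds to walking at the center of the corridor.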

To validate the proposed navigation algorithm, we used the small humanoid NAO. The images were acquired from a video stream with a 25 Hz frame rate and a resolution of 320×240 pixels. The image processing was performed on a ROI of 320×120 pixels placed at the bottom of the image.
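The region of interest described above amounts to keeping the bottom 120 rows of each 320×240 frame. A minimal sketch follows, assuming the frame is stored row by row with the first row at the top of the image; the helper name `bottom_roi` is hypothetical.

```python
FRAME_WIDTH, FRAME_HEIGHT = 320, 240   # resolution of the video stream
ROI_HEIGHT = 120                       # height of the processed region
FRAME_PERIOD_S = 1.0 / 25.0            # 25 Hz stream: one frame every 40 ms


def bottom_roi(frame, roi_height=ROI_HEIGHT):
    """Return the bottom `roi_height` rows of a row-major frame."""
    if roi_height > len(frame):
        raise ValueError("ROI is taller than the frame")
    # Rows are indexed from the top, so the ROI starts at height - roi_height.
    return frame[len(frame) - roi_height:]


# A dummy all-black frame stands in for an acquired image.
frame = [[0] * FRAME_WIDTH for _ in range(FRAME_HEIGHT)]
roi = bottom_roi(frame)
```

The same slice works on an image stored as a NumPy array, since slicing the first axis selects rows.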

We implemented a RANSAC-based dominant plane estimation for the detection of the ground plane in the image. For image processing we

have used the OpenCV library to compute the optical flow, find the image contours and compute the corresponding centroids.

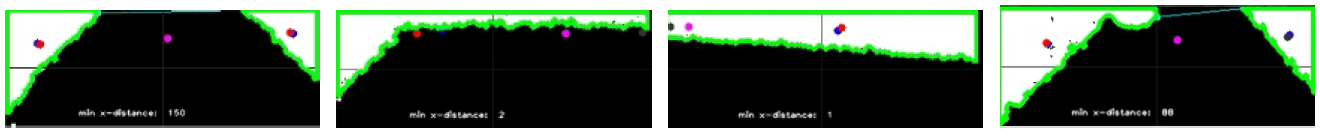

In particular, we used the GPU-based version of the pyramidal Lucas-Kanade optical flow. The following figure shows the relevant

features involved in the control strategy.

Image processing at four particular phases of the four presented simulations. Green curves highlight the contours of the detected obstacles. Blue dots are the corresponding centroids, red dots are the filtered centroids, the purple dot is the middle point. Gray dots simply highlight when left and right centroids are getting close to the image borders.
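The RANSAC-based dominant plane estimation mentioned above can be sketched as follows. This is only one possible formulation, under the assumption that the optical flow of the ground plane is well approximated by an affine function of the image coordinates; the source does not state which flow model was used. All function names, the threshold and the iteration count are hypothetical.

```python
import random


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(A, b):
    """Solve a 3x3 linear system with Cramer's rule; None if singular."""
    d = _det3(A)
    if abs(d) < 1e-9:
        return None
    solution = []
    for k in range(3):
        Ak = [row[:] for row in A]
        for i in range(3):
            Ak[i][k] = b[i]
        solution.append(_det3(Ak) / d)
    return solution


def fit_affine_flow(samples):
    """Fit u = a*x + b*y + c and v = d*x + e*y + f to three (x, y, u, v) samples."""
    A = [[x, y, 1.0] for x, y, _, _ in samples]
    pu = _solve3(A, [s[2] for s in samples])
    pv = _solve3(A, [s[3] for s in samples])
    if pu is None or pv is None:
        return None  # collinear sample points: no unique affine model
    return pu, pv


def flow_residual(model, sample):
    """Euclidean distance between the measured and the predicted flow vector."""
    (a, b, c), (d, e, f) = model
    x, y, u, v = sample
    du = a * x + b * y + c - u
    dv = d * x + e * y + f - v
    return (du * du + dv * dv) ** 0.5


def ransac_dominant_plane(flow, iterations=200, threshold=0.5, seed=0):
    """Return the indices of the flow vectors consistent with the dominant model."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        model = fit_affine_flow(rng.sample(flow, 3))
        if model is None:
            continue
        inliers = [i for i, s in enumerate(flow)
                   if flow_residual(model, s) < threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return best_inliers
```

Each element of `flow` is a tuple `(x, y, u, v)`: a pixel position and its measured flow vector. Under the assumed model, the returned inliers are labeled as ground, and the remaining pixels are candidates for obstacle contours.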

Results referring to [1].

The simulations and the experiments with NAO, which show the performance of the 1D-IBVS control, are shown in full in the video below. Further details can be found in [1].

Results referring to [2].

The comparative analysis of the proposed controllers and the effectiveness of the control scheme extended with a velocity feedback loop, both presented in [2], are shown in the video below.

[1] M. Ferro, A. Paolillo, A. Cherubini, and M. Vendittelli, Omnidirectional humanoid navigation in cluttered environments based on optical flow information, 2016 16th IEEE-RAS International Conference on Humanoid Robots, November 15-17, Cancun, Mexico.

[2] M. Ferro, A. Paolillo, A. Cherubini, and M. Vendittelli, Vision-based navigation of omnidirectional mobile robots, submitted to the IEEE Robotics and Automation Letters.