We develop a localization method for a team of heterogeneous robots, made up of a single aerial vehicle (UAV) and multiple ground vehicles (UGVs).

Being airborne, the UAV can provide a top view of the whole group of ground robots, which naturally gives it a supervisory role.

On the other hand, the ground robots are able to provide estimates of their motion through odometry measurements.

We assume that the robots can communicate with each other and that the UAV can measure the relative positions of the UGVs in its own frame of reference. However, the UAV cannot distinguish among the UGVs, i.e., it cannot measure their identities (anonymous measurements).

The assumption of unlabeled measurements arises because each UGV looks exactly the same from the UAV's point of view.

While in normal operation it is usually possible to discriminate among the measured robots (e.g., by tagging them), there are several adverse environmental conditions (low illumination, smoky environments, etc.) under which identifying the measured robots may not be feasible or reliable.

To solve the relative localization problem under this additional challenge, we adopt a multi-target tracking technique called the Probability Hypothesis Density (PHD) filter.

However, the standard version of this filter does not take into account odometric information coming from the targets (the ground robots), nor does it solve the problem of estimating their identities.

Hence, we design ID-PHD, a modification of the PHD filter that is able to reconstruct the identities of the targets by incorporating odometric data.

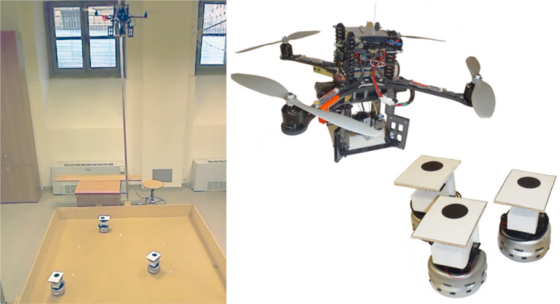

We want to develop a UAV/multi-UGV heterogeneous system for general purpose tasks.

The different capabilities of the robots make it possible to assign a different task to each of them. The UAV flies over the ground robots as a supervisor and provides the relative poses of the UGVs, which the UGVs exploit to perform navigation and formation control.

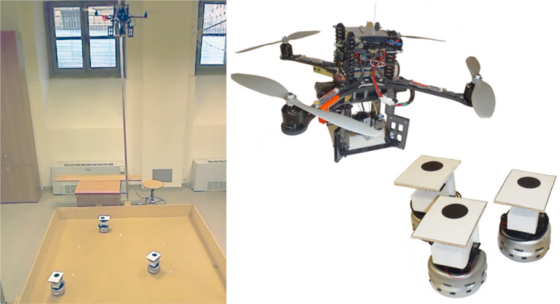

We consider a set of UGVs whose number is unknown and may vary over time. The following figure describes the system: each robot has a frame attached to its body and navigates in an environment where objects similar to the ground robots might be present and be measured by the UAV as possible robots (called false positives).

Given its role, the UAV carries a rich sensor suite:

- an Inertial Measurement Unit, which provides linear acceleration, angular velocities and attitude angles (roll, pitch, and yaw);

- a sonar range-finder, pointing downward to estimate the height from the ground;

- a monocular camera, pointing downward, whose images provide a list of unlabeled features representing the UGVs and estimates of the UAV velocity (via optical flow).

The UGVs' equipment is limited to an odometer that measures the robot displacement between two time instants.
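Because each odometry reading is a displacement expressed in the robot's own body frame, using it to predict a pose means composing it with the current pose estimate. The sketch below assumes planar motion and odometry given as a body-frame increment `(dx, dy, dtheta)`; `Pose2D`, `wrap_angle` and `apply_odometry` are hypothetical names, and the representation used on the real UGVs may differ.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float
    y: float
    theta: float  # heading in radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def apply_odometry(pose: Pose2D, odom: Pose2D) -> Pose2D:
    """Compose a pose with a displacement expressed in the robot's body frame."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return Pose2D(
        x=pose.x + c * odom.x - s * odom.y,  # rotate the increment into the world frame
        y=pose.y + s * odom.x + c * odom.y,
        theta=wrap_angle(pose.theta + odom.theta),
    )


# A robot at the origin facing +y moves 1 m forward and turns left by 90 degrees.
print(apply_odometry(Pose2D(0.0, 0.0, math.pi / 2), Pose2D(1.0, 0.0, math.pi / 2)))
```

The resulting pose is close to (0, 1, π): the forward step is rotated into the +y direction before the heading is updated.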

All these inputs are fused together to retrieve the identities and relative configurations of the UGVs.

Once these are retrieved, the entire formation can carry out its tasks.

Each task can be represented by an appropriate function, whose Jacobian maps the robot velocities to the rate of change of the task, i.e., it relates the robots' motion to task accomplishment.
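In the standard task-function notation (assumed here; the paper's own symbols may differ), the configurations of all robots are stacked into a vector q and a task variable σ evolves as in the first line below. As a hypothetical example, if q contains only the planar positions p_i of N UGVs (a holonomic simplification), the formation centroid is a task with a constant Jacobian:

```latex
\sigma = f(q), \qquad
\dot{\sigma} = \frac{\partial f(q)}{\partial q}\,\dot{q} = J(q)\,\dot{q}

\sigma_c = \frac{1}{N}\sum_{i=1}^{N} p_i, \qquad
J_c = \frac{1}{N}\begin{bmatrix} I_2 & I_2 & \cdots & I_2 \end{bmatrix}
```

Each 2×2 identity block I_2 multiplies the velocity of one UGV, so every robot contributes equally to the centroid velocity.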

To combine the tasks, we use redundancy-resolution techniques, since the entire formation can be seen as a single redundant robot.

For each task we define a priority level according to its effect on overall system performance: low-priority tasks must not affect the execution of high-priority ones.
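One common way to enforce strict priorities is null-space projection: the secondary task is projected into the null space of the primary task Jacobian, so it cannot alter the primary task velocity. The sketch below shows a two-level version of this standard scheme; it is not necessarily the exact formulation used in our system, and the example treats two UGVs as holonomic points with q = (x1, y1, x2, y2), which is a simplifying assumption. `prioritized_velocity` is a hypothetical name.

```python
import numpy as np


def prioritized_velocity(J1, sdot1, J2, sdot2):
    """Two-level task priority via null-space projection."""
    J1_pinv = np.linalg.pinv(J1)
    # Projector onto the null space of J1: J1 @ N1 == 0, so the secondary
    # contribution cannot change the primary task velocity.
    N1 = np.eye(J1.shape[1]) - J1_pinv @ J1
    return J1_pinv @ sdot1 + N1 @ np.linalg.pinv(J2) @ sdot2


# q = (x1, y1, x2, y2): two UGVs treated as holonomic points (simplification).
J1 = 0.5 * np.array([[1.0, 0.0, 1.0, 0.0],
                     [0.0, 1.0, 0.0, 1.0]])  # primary: formation centroid
sdot1 = np.array([0.2, 0.0])                  # desired centroid velocity [m/s]
J2 = np.array([[1.0, 0.0, 0.0, 0.0],
               [0.0, 1.0, 0.0, 0.0]])         # secondary: velocity of robot 1
sdot2 = np.array([0.0, 0.3])                  # desired robot-1 velocity [m/s]

qdot = prioritized_velocity(J1, sdot1, J2, sdot2)
print(qdot)
```

Here the centroid velocity (0.2, 0) is achieved exactly, while the secondary request (robot 1 moving along y at 0.3 m/s) is only partially satisfied: the result is q̇ = (0.2, 0.15, 0.2, −0.15), because satisfying it fully would move the centroid.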

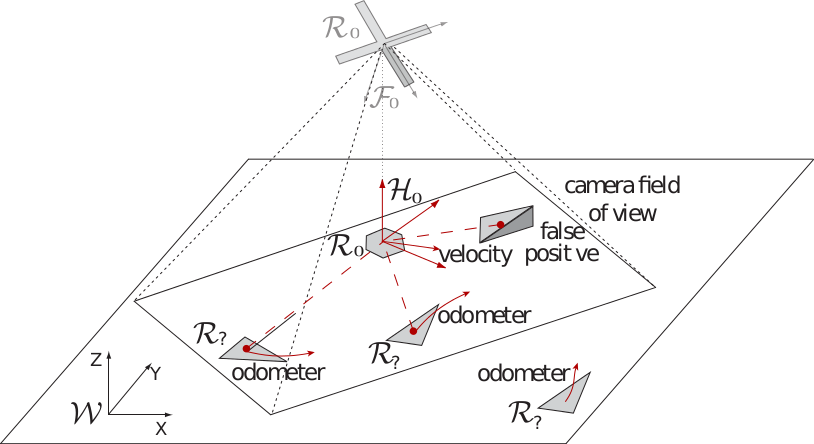

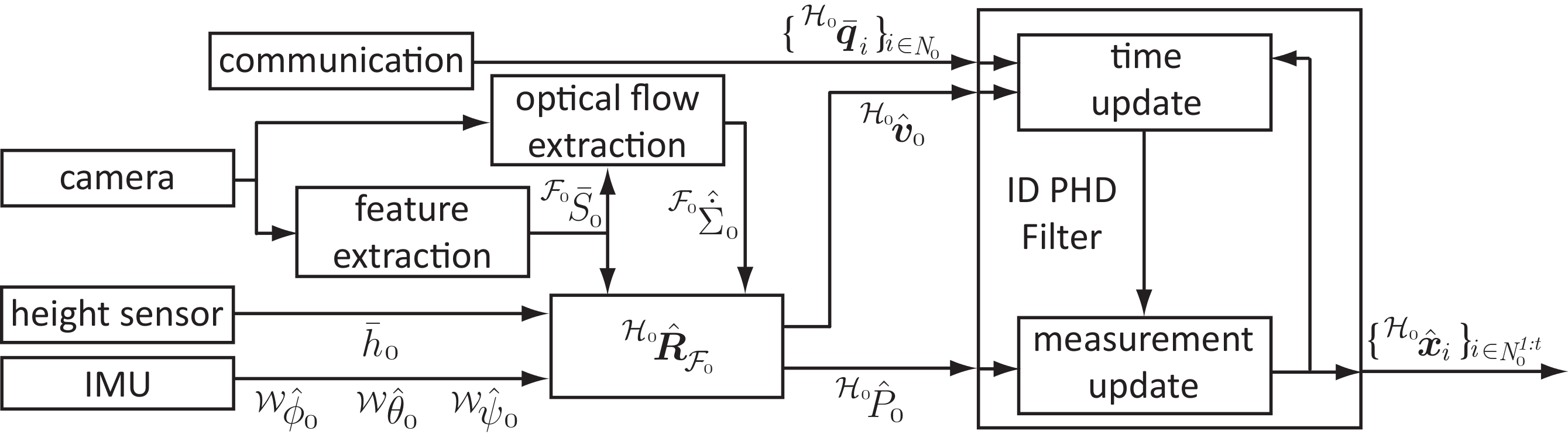

Our framework is depicted in the following figure.

From the UAV height and the positions of the features on the image plane, we obtain a set of 3D measurements. The main problem is that they are unlabeled! Our solution employs a PHD filter, a multi-target tracking technique. It relies on a two-stage prediction/correction scheme that uses a generic motion model to propagate the targets. However, it is not able to assign an identity to the tracked targets. In our case, the tracked objects are the UGVs, which are not completely unknown objects, since their odometries are known. Our idea is to modify the PHD filter so that it uses the odometries to predict the targets and to reconstruct their identities (in the filter update step).
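Under simplifying assumptions, turning image features into 3D points needs only the camera intrinsics and the sonar height. The sketch assumes a pinhole camera whose optical axis points straight down (roll and pitch already compensated with the IMU), flat ground, and features lying on the ground plane (ignoring the UGVs' own height). The intrinsics `fx, fy, cx, cy` and the function `backproject` are hypothetical.

```python
from typing import List, Tuple


def backproject(features: List[Tuple[float, float]], height: float,
                fx: float, fy: float, cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Map pixel features to 3D points in a downward-looking camera frame.

    Assumes a level camera (attitude compensated), flat ground, and features on
    the ground plane, so every point lies at depth `height` (from the sonar).
    """
    points = []
    for u, v in features:
        x = (u - cx) * height / fx  # inverse pinhole projection, x axis
        y = (v - cy) * height / fy  # inverse pinhole projection, y axis
        points.append((x, y, height))
    return points


# Hypothetical intrinsics and a 2 m sonar reading.
print(backproject([(420.0, 240.0), (320.0, 240.0)], 2.0, 400.0, 400.0, 320.0, 240.0))
# prints [(0.5, 0.0, 2.0), (0.0, 0.0, 2.0)]
```

The output is still an unordered list: nothing in it says which UGV each point belongs to, which is exactly the problem the filter has to solve.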

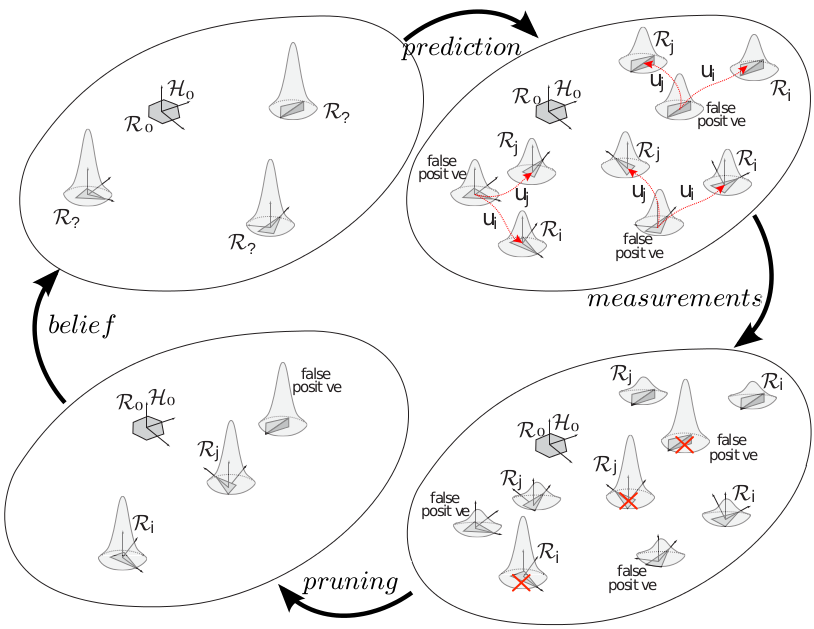

The following small example shows how our framework retrieves the identities (IDs) of the UGVs:

In the top-left figure, the UAV (depicted as a hexagon) has to estimate the identities of the three robots on the ground (each depicted as a triangle).

In fact, one of them is a false positive (an object that looks like a robot but is not; it is in the top-right of the same image).

At the beginning, the UAV cannot distinguish among the robots, so it assigns the same weight to each of them (represented by the Gaussian over each robot).

Then, the prediction step is performed: each odometry, plus the zero odometry, is applied to each robot.

The zero odometry is how false positives are taken into account. In this example, three hypotheses are generated for each robot (depicted in the top-right image).

Note that this is the step where an ID is assigned to each hypothesis: the i-th identity is assigned when the i-th odometry is applied. At the end of this step, each robot has one or more hypotheses.
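The prediction step of the example can be sketched as follows. This is a simplified illustration, not the ID-PHD implementation: it assumes every current component is branched once per odometry reading plus once for the zero odometry, that the prior weight is split equally among the branches (a hypothetical choice that preserves the total weight), and it omits covariances. Odometries are body-frame increments keyed by robot ID; the label `ZERO_ODOM_ID = 0` for the zero-odometry branch is a hypothetical convention.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Pose = Tuple[float, float, float]  # (x, y, theta) in the UAV frame
ZERO_ODOM_ID = 0  # hypothetical label for the zero-odometry branch


@dataclass
class Hypothesis:
    weight: float
    pose: Pose
    robot_id: Optional[int]  # None = not yet assigned


def compose(pose: Pose, odom: Pose) -> Pose:
    """Apply a body-frame displacement (dx, dy, dtheta) to a planar pose."""
    x, y, th = pose
    dx, dy, dth = odom
    c, s = math.cos(th), math.sin(th)
    new_th = math.atan2(math.sin(th + dth), math.cos(th + dth))
    return (x + c * dx - s * dy, y + s * dx + c * dy, new_th)


def predict(components: List[Hypothesis], odometries: Dict[int, Pose]) -> List[Hypothesis]:
    """Branch every component once per odometry, plus once for the zero odometry.

    Applying the i-th odometry labels the new hypothesis with identity i.
    """
    branches = len(odometries) + 1
    predicted = []
    for comp in components:
        w = comp.weight / branches  # assumption: equal split keeps the total weight
        for rid, odom in odometries.items():
            predicted.append(Hypothesis(w, compose(comp.pose, odom), rid))
        predicted.append(Hypothesis(w, comp.pose, ZERO_ODOM_ID))
    return predicted


# Three detected objects (one is a false positive) and two UGV odometries.
components = [Hypothesis(1.0, (0.0, 0.0, 0.0), None),
              Hypothesis(1.0, (2.0, 0.0, 0.0), None),
              Hypothesis(1.0, (1.0, 2.0, 0.0), None)]
odometries = {1: (0.5, 0.0, 0.0), 2: (0.0, 0.0, math.pi / 2)}
predicted = predict(components, odometries)
print(len(predicted))  # prints 9
```

With two odometries plus the zero odometry, each detected object yields three labeled hypotheses, as in the top-right image.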

The hypotheses are then weighted according to the measurements (red crosses in the bottom-right figure), as shown by the new heights of the Gaussian functions. For example, the false positive is associated with the zero odometry.
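The weighting step can be illustrated with the standard Gaussian-mixture PHD (GM-PHD) update, where each hypothesis carries an ID label that is simply propagated. This sketch assumes planar position measurements with an identity measurement model, a detection probability `p_detect` and a constant clutter intensity `clutter`. All parameter values, and the label `0` for the zero-odometry branch, are hypothetical, and the actual ID-PHD weighting may differ in its details.

```python
import numpy as np
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Component:
    weight: float
    mean: np.ndarray  # predicted planar position (x, y)
    cov: np.ndarray   # 2x2 position covariance
    robot_id: Optional[int]  # hypothesized identity (0 = zero-odometry branch)


def gaussian_pdf(z: np.ndarray, mean: np.ndarray, cov: np.ndarray) -> float:
    """Density of a 2D Gaussian evaluated at z."""
    d = z - mean
    norm = 1.0 / (2.0 * np.pi * np.sqrt(np.linalg.det(cov)))
    return float(norm * np.exp(-0.5 * d @ np.linalg.solve(cov, d)))


def phd_update(components: List[Component], measurements: List[np.ndarray],
               R: np.ndarray, p_detect: float = 0.9, clutter: float = 1e-3) -> List[Component]:
    # Missed-detection terms: each predicted component survives with weight (1 - p_D) w.
    updated = [Component((1.0 - p_detect) * c.weight, c.mean, c.cov, c.robot_id)
               for c in components]
    for z in measurements:
        S = [c.cov + R for c in components]  # innovation covariances (H = I)
        likes = [p_detect * c.weight * gaussian_pdf(z, c.mean, s)
                 for c, s in zip(components, S)]
        total = clutter + sum(likes)  # normalization shared by all components for this z
        for c, s, like in zip(components, S, likes):
            K = c.cov @ np.linalg.inv(s)  # Kalman gain
            updated.append(Component(like / total, c.mean + K @ (z - c.mean),
                                     (np.eye(2) - K) @ c.cov, c.robot_id))
    return updated


# Two hypotheses for the same detected object: moved with odometry 1, or not moved.
P = 0.01 * np.eye(2)
hyps = [Component(0.5, np.array([0.0, 0.0]), P, 1),
        Component(0.5, np.array([1.0, 0.0]), P, 0)]
posterior = phd_update(hyps, [np.array([0.05, 0.0])], R=0.01 * np.eye(2))
totals = {}
for c in posterior:
    totals[c.robot_id] = totals.get(c.robot_id, 0.0) + c.weight
print(totals)
```

The measurement falls next to the hypothesis predicted with odometry 1, so almost all of the weight moves to ID 1, while the zero-odometry branch keeps only its missed-detection share.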

Note that our framework is also able to handle non-moving robots.

As a final step, a pruning phase removes the hypotheses whose weight is below a given threshold, and a final estimate is computed for each robot.
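Pruning and the final per-robot estimate can be sketched as below. The threshold value is hypothetical, and picking the highest-weight hypothesis for each ID is one possible estimator, in line with the best-guess choice used in the experiments. In this sketch, hypotheses with the hypothetical zero-odometry label `0` are not reported as identified UGVs.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Hypothesis:
    weight: float
    position: Tuple[float, float]
    robot_id: Optional[int]  # 0 = zero-odometry branch (hypothetical label)


def prune(hyps: List[Hypothesis], threshold: float = 0.05) -> List[Hypothesis]:
    """Drop hypotheses whose weight is below the threshold."""
    return [h for h in hyps if h.weight >= threshold]


def estimate(hyps: List[Hypothesis]) -> Dict[int, Tuple[float, float]]:
    """Return, for each robot ID, the position of its highest-weight hypothesis."""
    best: Dict[int, Hypothesis] = {}
    for h in hyps:
        if h.robot_id is None or h.robot_id == 0:
            continue  # not reported as an identified UGV in this sketch
        if h.robot_id not in best or h.weight > best[h.robot_id].weight:
            best[h.robot_id] = h
    return {rid: h.position for rid, h in best.items()}


hyps = [Hypothesis(0.90, (0.0, 0.0), 1), Hypothesis(0.02, (1.0, 0.0), 1),
        Hypothesis(0.70, (2.0, 1.0), 2), Hypothesis(0.20, (2.1, 1.0), 2),
        Hypothesis(0.95, (3.0, 3.0), 0)]
print(estimate(prune(hyps)))  # prints {1: (0.0, 0.0), 2: (2.0, 1.0)}
```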

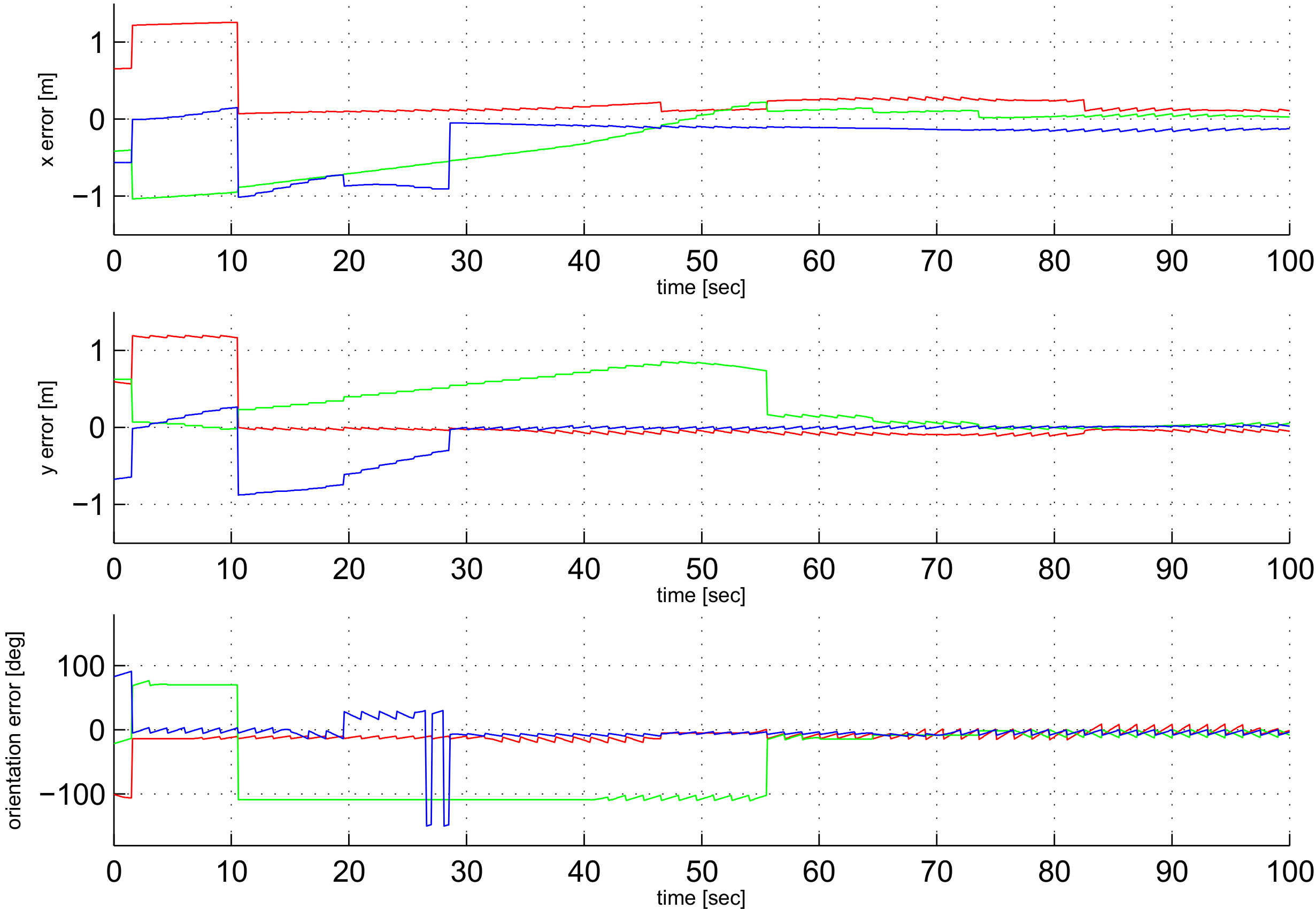

This framework was first validated in simulation using Gazebo. Realistic 3D models of all the robots were used, together with simulated noisy sensors that emulate the real ones used in the experiments.

A typical simulation starts with the UAV hovering above three UGVs. A position controller keeps the flying robot hovering in place for the whole simulation.

The UGVs move randomly while performing obstacle avoidance.

The initially wrong association of the UGVs leads to an error peak at the beginning of the simulation.

As soon as the ground robots start to move, the filter converges and the error goes almost to zero.

The estimates converge in less than one minute.

The final error is below 12 cm for the position components and below 10 degrees for the orientation.
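When reporting errors like these, the orientation error should be wrapped to (−180°, 180°]; otherwise estimates on either side of ±180° would appear almost 360° off. A minimal sketch, assuming poses given as `(x, y, theta)` with theta in radians and ground truth available from the simulator; `pose_errors` is a hypothetical helper.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # (x [m], y [m], theta [rad])


def pose_errors(estimate: Pose, truth: Pose) -> Tuple[float, float, float]:
    """Absolute x/y errors in meters and wrapped heading error in degrees."""
    ex = abs(estimate[0] - truth[0])
    ey = abs(estimate[1] - truth[1])
    dth = estimate[2] - truth[2]
    dth = math.atan2(math.sin(dth), math.cos(dth))  # wrap to (-pi, pi]
    return ex, ey, math.degrees(abs(dth))


# Headings of 175 deg and -178 deg differ by 7 deg, not 353 deg.
print(pose_errors((1.05, 2.0, math.radians(175)), (1.0, 2.1, math.radians(-178))))
```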

As a last remark, keep in mind that the transient time depends on the UGVs' velocities: the more the trajectories differ from one another, the faster the filter converges.

Since our UGVs move slowly (0.5 m/s of maximum speed), the transient time is around one minute.
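A simplified two-robot argument (our own illustration, not an analysis from the paper) shows why distinct motions speed up convergence. Assume known previous positions p_1, p_2, odometry displacements d_1, d_2 already rotated into the UAV frame, noise-free measurements z_i = p_i + d_i, and an isotropic Gaussian likelihood with variance σ². The log-likelihood ratio between the correct labeling and the swapped one (d_2 applied to robot 1, d_1 to robot 2) is:

```latex
\ln\frac{L_{\text{correct}}}{L_{\text{swap}}}
= \frac{\lVert z_1 - p_1 - d_2\rVert^2 + \lVert z_2 - p_2 - d_1\rVert^2
      - \lVert z_1 - p_1 - d_1\rVert^2 - \lVert z_2 - p_2 - d_2\rVert^2}{2\sigma^2}
= \frac{2\,\lVert d_1 - d_2\rVert^2}{2\sigma^2}
= \frac{\lVert d_1 - d_2\rVert^2}{\sigma^2}
```

Summed over k steps, the evidence is Σ_k ‖d_1^k − d_2^k‖²/σ²: small displacements (slow robots) or similar trajectories accumulate evidence slowly, which is consistent with the transient of about one minute.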

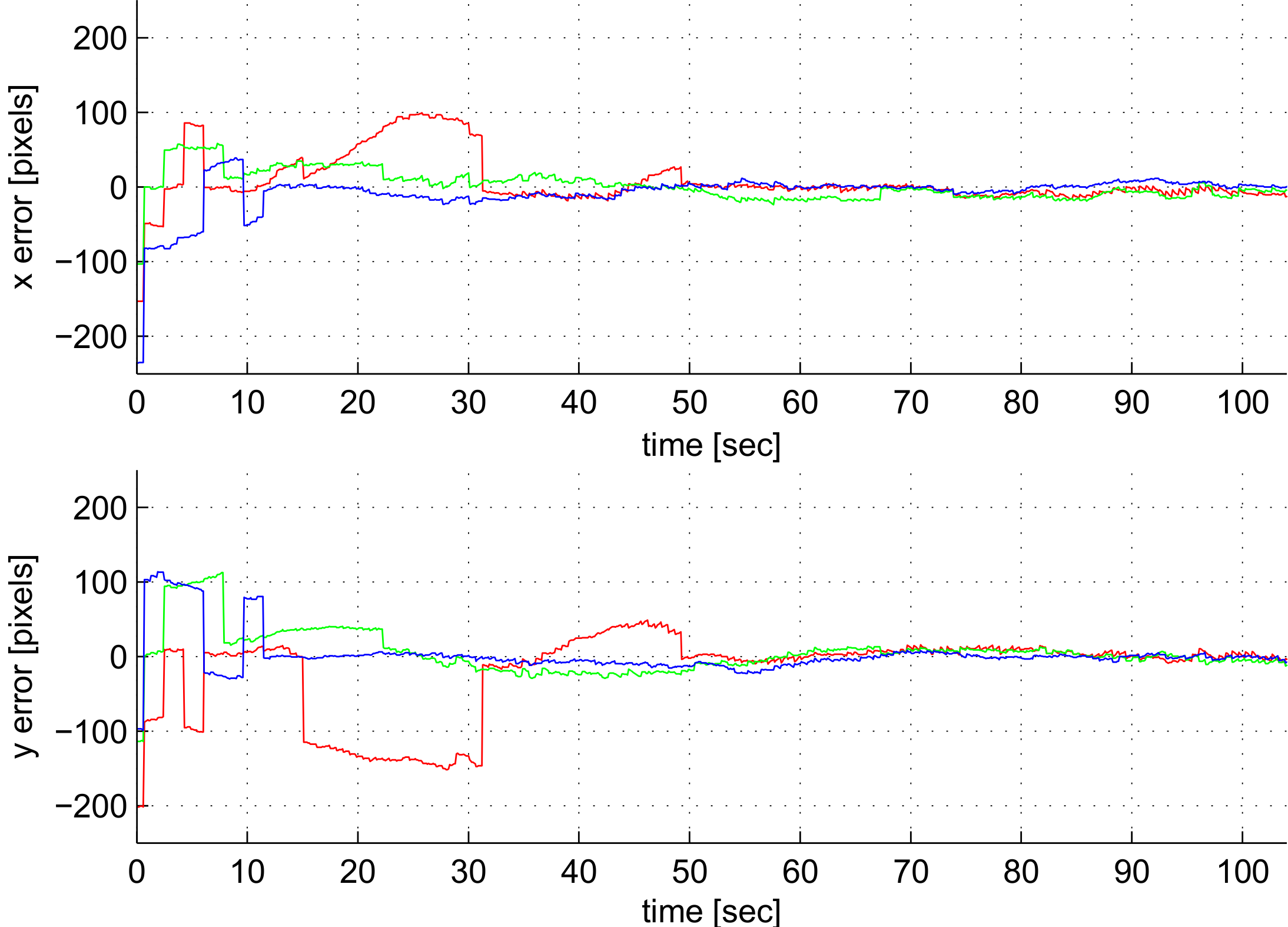

After testing the system in simulation, we performed some experiments with real robots.

Since our estimates are based on feature positions in the image plane and we do not have an external ground truth, we re-projected the estimated positions of the UGVs onto the image plane and computed the errors with respect to the measured features.
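The re-projection check can be sketched with a pinhole model. The sketch assumes a downward-looking camera, estimates expressed in the camera frame with depth equal to the UAV height, and hypothetical intrinsics `fx, fy, cx, cy`. How each estimate is paired with a feature is not stated here, so the sketch simply takes the corresponding feature as input; `project` and `pixel_error` are hypothetical names.

```python
import math
from typing import Tuple


def project(point: Tuple[float, float, float],
            fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point (x, y, z) to pixels (u, v)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def pixel_error(estimate: Tuple[float, float, float], feature: Tuple[float, float],
                fx: float, fy: float, cx: float, cy: float) -> float:
    """Euclidean distance in pixels between a re-projected estimate and a feature."""
    u, v = project(estimate, fx, fy, cx, cy)
    return math.hypot(u - feature[0], v - feature[1])


# Hypothetical intrinsics; the estimate lies 2 m below the camera.
err = pixel_error((0.5, 0.0, 2.0), (430.0, 240.0), 400.0, 400.0, 320.0, 240.0)
print(err)  # prints 10.0
```

At depth z, one pixel corresponds to roughly z/f meters, so here 10 px ≈ 10 × 2.0 / 400 = 0.05 m. By the same relation, the reported correspondence of 20 pixels to about 10 cm implies z/f ≈ 0.005 m per pixel.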

As in the simulation case, the initial error peak is due to the lack of knowledge about the identities of the UGVs, which have to be reconstructed.

After the initial transient (around 50 seconds), the algorithm converges and the errors remain below 20 pixels (about 10 cm) until the end of the experiment.

Since the diameter of our UGVs is 14 cm, this error is comparable to the size of the robots themselves.

Finally, the estimates "shake" a little because we use the best particle guess for each estimate, and this guess may change over time.

This choice was shown to give better accuracy than the average when multiple hypotheses survive the pruning phase.
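A toy example shows why the weighted average can be misleading when distinct hypotheses survive pruning: the average lands between them, where neither hypothesis places a robot. The weights and positions below are hypothetical, and `best_guess` / `weighted_mean` are illustrative helpers.

```python
from typing import List, Tuple

Hypothesis = Tuple[float, float, float]  # (weight, x, y)


def weighted_mean(hyps: List[Hypothesis]) -> Tuple[float, float]:
    """Weight-averaged position of all hypotheses."""
    total = sum(w for w, _, _ in hyps)
    return (sum(w * x for w, x, _ in hyps) / total,
            sum(w * y for w, _, y in hyps) / total)


def best_guess(hyps: List[Hypothesis]) -> Tuple[float, float]:
    """Position of the single highest-weight hypothesis."""
    _, x, y = max(hyps, key=lambda h: h[0])
    return (x, y)


# Two surviving hypotheses for the same robot ID, 1 m apart.
hyps = [(0.6, 0.0, 0.0), (0.4, 1.0, 0.0)]
print(best_guess(hyps))     # prints (0.0, 0.0)
print(weighted_mean(hyps))  # prints (0.4, 0.0)
```

If the two weights swap over time, the best guess jumps from one hypothesis to the other, which is the kind of small "shaking" described above.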

The full experiment with the heterogeneous system is shown in the video below.

[1] P. Stegagno, M. Cognetti, L. Rosa, P. Peliti and G. Oriolo, "Relative Localization and Identification in a Heterogeneous Multi-Robot System," 2013 IEEE International Conference on Robotics and Automation (ICRA 2013), Karlsruhe, Germany, pp. 1857-1864, 2013.