The ASPICE Project

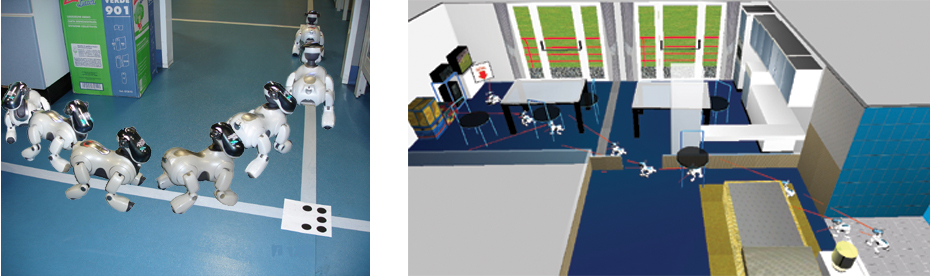

Assistive technology is an emerging area where robotic devices can be used to help individuals with motor disabilities achieve independence in daily living activities. The ASPICE project, funded by the Italian foundation Telethon, aims to develop a technological aid that allows neuromotor-disabled users to improve their mobility and communication within the surrounding environment by remotely controlling a set of home-installed appliances, including a Sony AIBO mobile robot.

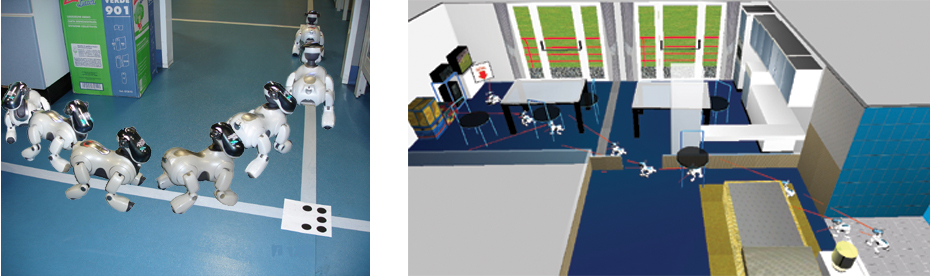

As one of the project partners, our group developed the navigation system for the robot, which can be driven in three modes: single-step, semi-autonomous, and autonomous. The user is expected to choose single-step navigation to retain complete control of the robot. In semi-autonomous navigation, the user simply specifies a main direction of motion, leaving to the robot the task of avoiding obstacles along that direction. To this end, artificial velocity fields are defined on a local occupancy grid built using measurements from the AIBO head rangefinder. Finally, in autonomous mode, the user directly assigns a relevant target to the robot. To reach the target, the robot uses a visual roadmap, which is first approached and then followed up to the destination. Roadmap crossings are identified by means of a simple encoding mechanism.
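To give a rough feel for the semi-autonomous mode, the sketch below combines a constant velocity along the user-requested direction with a repulsive term generated by each occupied cell of a local grid. This is only an illustration under stated assumptions, not the ASPICE implementation: the function name `semi_autonomous_velocity`, the parameters `influence`, `gain`, and `speed`, the robot-centered grid layout, and the specific repulsive law (a classical potential-field form) are all hypothetical choices made for this example.

```python
import math


def semi_autonomous_velocity(grid, cell_size, direction,
                             influence=0.5, gain=0.01, speed=1.0):
    """Return a planar velocity (vx, vy) in the robot frame.

    Assumptions made for this sketch (not taken from the ASPICE papers):
    - grid is a square list of lists, 1 = occupied, 0 = free;
    - the robot sits at the center cell;
    - column index maps to x, row index maps to y;
    - occupied cells closer than `influence` meters push the robot away.
    """
    norm = math.hypot(direction[0], direction[1])
    if norm == 0.0:
        raise ValueError("direction must be a non-zero vector")

    # Constant field along the direction requested by the user.
    vx = speed * direction[0] / norm
    vy = speed * direction[1] / norm

    center = len(grid) // 2
    for i, row in enumerate(grid):
        for j, occupied in enumerate(row):
            if not occupied:
                continue
            # Position of the occupied cell relative to the robot.
            ox = (j - center) * cell_size
            oy = (i - center) * cell_size
            dist = math.hypot(ox, oy)
            if dist == 0.0 or dist > influence:
                continue
            # Repulsive magnitude grows as the obstacle gets closer
            # and vanishes at the edge of the influence radius.
            magnitude = gain * (1.0 / dist - 1.0 / influence) / dist ** 2
            vx -= magnitude * ox / dist
            vy -= magnitude * oy / dist
    return vx, vy


if __name__ == "__main__":
    # 5x5 local grid with 10 cm cells and one obstacle ahead and to the side.
    local_grid = [[0] * 5 for _ in range(5)]
    local_grid[3][3] = 1
    print(semi_autonomous_velocity(local_grid, 0.1, (1.0, 0.0)))
```

With an empty grid the command reduces to the requested direction scaled by `speed`; an occupied cell near the path bends the command away from it, which is the qualitative behavior described above.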

Moreover, the video from the robot camera is fed back to the user for assisting him/her in driving the robot as well as for increasing his/her sense of presence within the environment. A GUI for head control has also been developed to allow the user to directly move the robot head and point the camera in any desired direction. Another feature of the system is a GUI for vocal requests, which has been included to improve the communication of the patient with the caregiver.

The proposed approach has been developed by A. Cherubini and G. Oriolo (with the help of S. Bruscino and F. Macrì).

For a quick introduction to the ASPICE project, see this paper presented at BIOROB'06. More details on the robot navigation system are given in this paper presented at ICRA'07. A detailed description of the ASPICE vision-based navigation mode is given in this paper presented at the First International Workshop on Robot Vision, VISAPP'07.

This clip shows a simulation run in Webots, in which the user tries to drive AIBO to a point in the environment (indicated by a red arrow) by giving a single direction command along the line joining the initial and final configurations. The semi-autonomous navigation module accomplished the task in spite of the presence of obstacles.

The whole ASPICE system (including the multimode navigation module) is currently under clinical validation, in order to assess its performance through patient feedback. Have a look at these videos:

In experiments 1 (clip) and 2 (clip) the robot is driven in semi-autonomous mode: it avoids obstacles while performing forward and lateral motion, respectively.

In experiment 3 (clip) the robot is driven in autonomous mode. In particular, it approaches the roadmap and follows it up to the first crossing; the robot then identifies the crossing, turns, and proceeds towards the goal according to its current plan. To get an idea of the difficulty of processing images acquired by the AIBO CMOS camera in varying light conditions, look at the images below.

Experiment 4 (clip) is a typical experiment where the system is tested by using a mu-based Brain-Computer Interface (BCI) to drive the robot.

This clip, which accompanied the ICRA'07 paper, summarizes the system's most interesting features.

NEW!!! In this clip, the robot is driven in semi-autonomous mode: a simple forward motion request has been sent by the user. The robot is able to avoid obstacles in a complex scenario.

NEW!!! In this clip, the robot accomplishes a simple domestic task by using all three ASPICE navigation modes. The video stream fed back by the robot camera during the experiment is also shown in the clip.

NEW!!! In these three clips, a P300-based BCI is used to drive the robot with the single-step, hybrid (i.e., single-step and semi-autonomous), and autonomous navigation modes.