
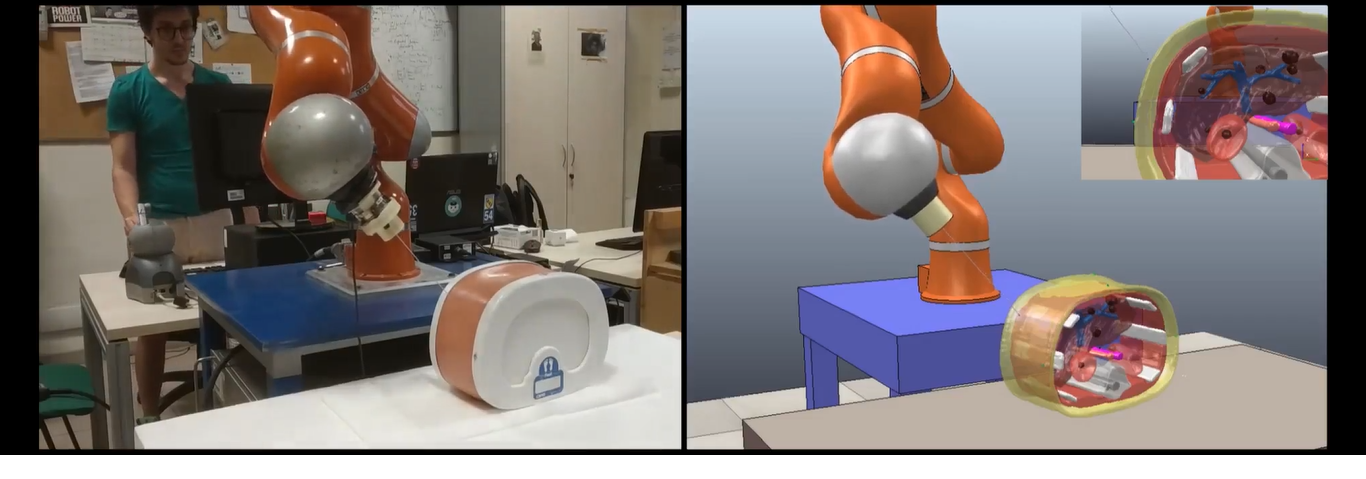

During teleoperated robot-assisted biopsies, force and visual feedback are fundamental to guaranteeing accuracy and safety in the execution of the task. To allow seamless introduction of the robot into the clinical workflow, it is desirable to measure interaction forces without relying on dedicated, additional sensors. In addition, typical imaging systems do not offer a real-time 3D view of the remote site at the operator side. In this project, we provide two contributions: i) a sensorless algorithm for identifying the needle-tissue interaction model; ii) a modular framework, interfaced with a virtual environment, offering a complete visualization of the procedure at the operator side. The identification algorithm is validated on an isinglass-based phantom, while the framework is tested with the CoppeliaSim (formerly V-REP) software.
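As a rough illustration of what identifying a needle-tissue interaction model can involve, the sketch below fits a Kelvin-Voigt contact model, f = k·d + b·v, by ordinary least squares. The choice of the Kelvin-Voigt model, the function name `fit_kelvin_voigt`, and the assumption that force samples have already been estimated without a dedicated sensor (for example from the robot's joint-level signals) are illustrative assumptions, not details taken from the project.

```python
def fit_kelvin_voigt(depths, velocities, forces):
    """Least-squares fit of f = k*d + b*v (hypothetical helper).

    depths     -- needle penetration depths [m]
    velocities -- insertion velocities [m/s]
    forces     -- interaction forces [N], assumed to be estimated
                  without a dedicated force sensor
    Returns the stiffness k [N/m] and damping b [N*s/m].
    """
    if not (len(depths) == len(velocities) == len(forces)):
        raise ValueError("all sample lists must have the same length")

    # Entries of the 2x2 normal equations (A^T A) theta = A^T f.
    s_dd = sum(d * d for d in depths)
    s_dv = sum(d * v for d, v in zip(depths, velocities))
    s_vv = sum(v * v for v in velocities)
    s_df = sum(d * f for d, f in zip(depths, forces))
    s_vf = sum(v * f for v, f in zip(velocities, forces))

    det = s_dd * s_vv - s_dv * s_dv
    # Depth and velocity must not be proportional, otherwise k and b
    # cannot be separated from the data.
    if det <= 1e-12 * s_dd * s_vv:
        raise ValueError("data are not rich enough to identify k and b")

    # Solve the 2x2 system with Cramer's rule.
    k = (s_df * s_vv - s_vf * s_dv) / det
    b = (s_vf * s_dd - s_df * s_dv) / det
    return k, b


if __name__ == "__main__":
    # Synthetic samples generated from k = 200 N/m and b = 5 N*s/m.
    d = [0.001, 0.002, 0.003, 0.004]
    v = [0.01, 0.02, 0.01, 0.03]
    f = [200.0 * di + 5.0 * vi for di, vi in zip(d, v)]
    print(fit_kelvin_voigt(d, v, f))
```

A batch fit is used here only for brevity; an online variant such as recursive least squares would be a natural alternative when the parameters must be updated during insertion.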

More information about this topic can be found here.


In this work, the problem of collision-free navigation of omnidirectional mobile robots in environments with obstacles is addressed. Information from a monocular camera, encoders, and an inertial measurement unit is used to achieve the task. Three different visual servoing control schemes, compatible with the class of considered robot kinematics and sensor equipment, are analysed, and their robustness properties with respect to actuation inaccuracies are discussed. Then, a controller is proposed with a formal guarantee of convergence to the bisector of a corridor. The main controller components are a visual servoing control scheme and a velocity estimation algorithm integrating visual, kinematic and inertial information. The behaviour of all the considered algorithms is analysed and illustrated through simulations for both a wheeled and a humanoid robot. The solution proposed as the most efficient and robust with respect to actuation inaccuracies is also validated experimentally on a real NAO humanoid.

More information about this topic can be found here.


The da Vinci Research Kit (dVRK) is a first-generation da Vinci robot repurposed as a research platform and coupled with software and controllers developed by research users. A sizeable community already shares the dVRK (32 systems at 28 sites worldwide). Access to the robotic system for training surgeons and for developing new surgical procedures, tools and control modalities is still difficult because of its limited availability and high maintenance costs. Simulation tools provide a low-cost, easy and safe alternative to the real platform for preliminary research and training activities. The Portable dVRK is based on a V-REP simulator of the dVRK patient-side and endoscopic camera manipulators, which are controlled through two haptic interfaces and a 3D viewer, respectively. The V-REP simulator is augmented with a physics engine that renders the interaction of newly developed tools with soft objects. Full integration in the ROS control architecture makes the simulator flexible and easy to interface with other devices. Several scenes have been implemented to illustrate the performance and potential of the developed simulator.

More information about this topic can be found here.


After two decades of lively activity, research in Minimally Invasive Robotic Surgery (MIRS) is focusing on enhancing the degree of autonomy of procedures that are still executed by surgeons, through the introduction of autonomous robot operation modes. These procedures include suturing which, being a repetitive and tiring task, usually increases operation time, costs and the risk of complications. Automatic suturing.

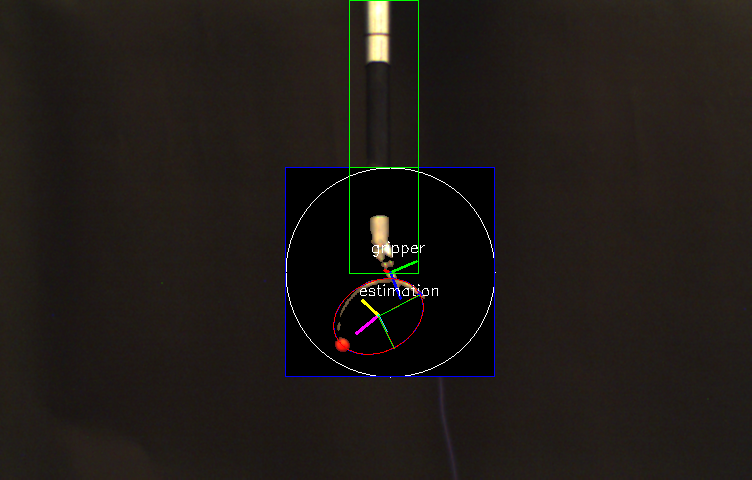

We propose an approach to needle detection and tracking based on Kalman filtering, which combines visual information from a monocular camera with the robot kinematics. Besides providing fast and reliable needle pose estimation, the proposed method is robust to scene variations, such as partial needle occlusion or needle re-grasping, as well as to external disturbances perturbing the needle pose.

More information about this topic can be found here.
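The idea of fusing kinematics and vision in a Kalman filter can be sketched on a single coordinate of the needle position. This is a deliberately reduced illustration, not the filter used in the project: the class `ScalarKalman`, the function `track`, the scalar state, and the noise values are all assumptions. The prediction step uses the displacement given by the robot kinematics, the correction step uses the visual measurement, and the correction is skipped when the needle is occluded.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class ScalarKalman:
    """Scalar Kalman filter for one needle coordinate (illustrative)."""
    x: float  # current estimate of the coordinate
    p: float  # variance of the estimate
    q: float  # process noise variance (kinematic model uncertainty)
    r: float  # measurement noise variance (visual detection uncertainty)

    def predict(self, delta: float) -> None:
        # Propagate the estimate with the displacement computed from
        # the robot kinematics; uncertainty grows by q.
        self.x += delta
        self.p += self.q

    def update(self, z: Optional[float]) -> None:
        # No visual detection (e.g. occlusion): keep the prediction.
        if z is None:
            return
        gain = self.p / (self.p + self.r)
        self.x += gain * (z - self.x)
        self.p *= 1.0 - gain


def track(kf: ScalarKalman,
          deltas: Sequence[float],
          measurements: Sequence[Optional[float]]) -> List[float]:
    """Run one predict/update cycle per time step and collect estimates."""
    estimates = []
    for delta, z in zip(deltas, measurements):
        kf.predict(delta)
        kf.update(z)
        estimates.append(kf.x)
    return estimates


if __name__ == "__main__":
    kf = ScalarKalman(x=0.0, p=1.0, q=0.01, r=0.1)
    # The third visual measurement is missing, mimicking an occlusion.
    print(track(kf, [1.0] * 5, [1.0, 2.0, None, 4.0, 5.0]))
```

During the occluded step the estimate relies on kinematics alone and its variance increases, so the next visual measurement is weighted more heavily. A full pose tracker would use a vector state with orientation and a nonlinear camera model.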


The list of publications can be found here.